Accelerating Autonomous Deployment for Global OEMs

From Munich to the Middle East and Beyond

Real-Time Perception Validation for Autonomous Systems

Accelerating autonomous deployment for perception-driven systems. From Munich to complex urban environments and beyond.

We build real-time, edge-native annotation and verification infrastructure for developing and validating LiDAR- and camera-based perception systems. Our platform enables continuous perception validation, faster iteration cycles, and safer deployment across diverse operating conditions, bridging simulation, testing, and real-world operation.

Autonomous Mobility

Tele-Operations

Data Infrastructure

AI & Simulation

Safety Systems

5G Connectivity

Smart Cities

Fleet Management

Mapping & Localization

Edge Computing

Real-Time Perception Gap

Less than 5% of perception validation today happens in real time at the edge. Most autonomous systems rely on offline logs and delayed annotation, leaving critical perception failures undiscovered until late in development or deployment. This creates blind spots in safety validation and slows iteration cycles.

Latency & Feedback Delay

Over 70% of perception issues are detected only after post-processing, due to cloud-only pipelines and manual annotation workflows. The lack of immediate feedback prevents rapid debugging, limits on-vehicle learning, and increases the cost and risk of real-world testing.

European Urban Complexity

European cities exhibit 100+ unique combinations of road layouts, tram integrations, mixed traffic rules, and signage standards. Without continuous, city-specific perception validation, autonomy stacks trained elsewhere struggle to generalize reliably to dense urban environments like Munich.

Our Vision

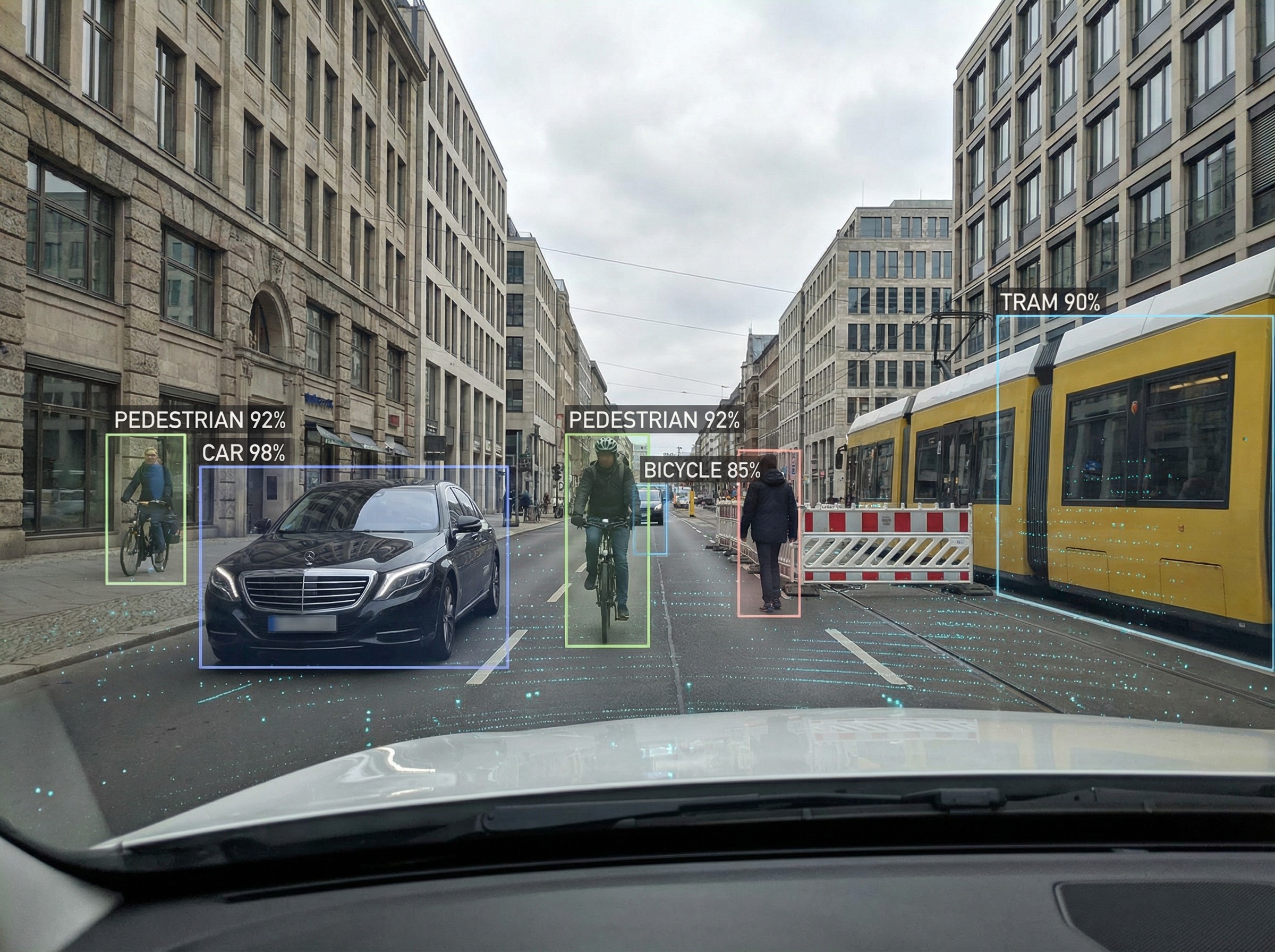

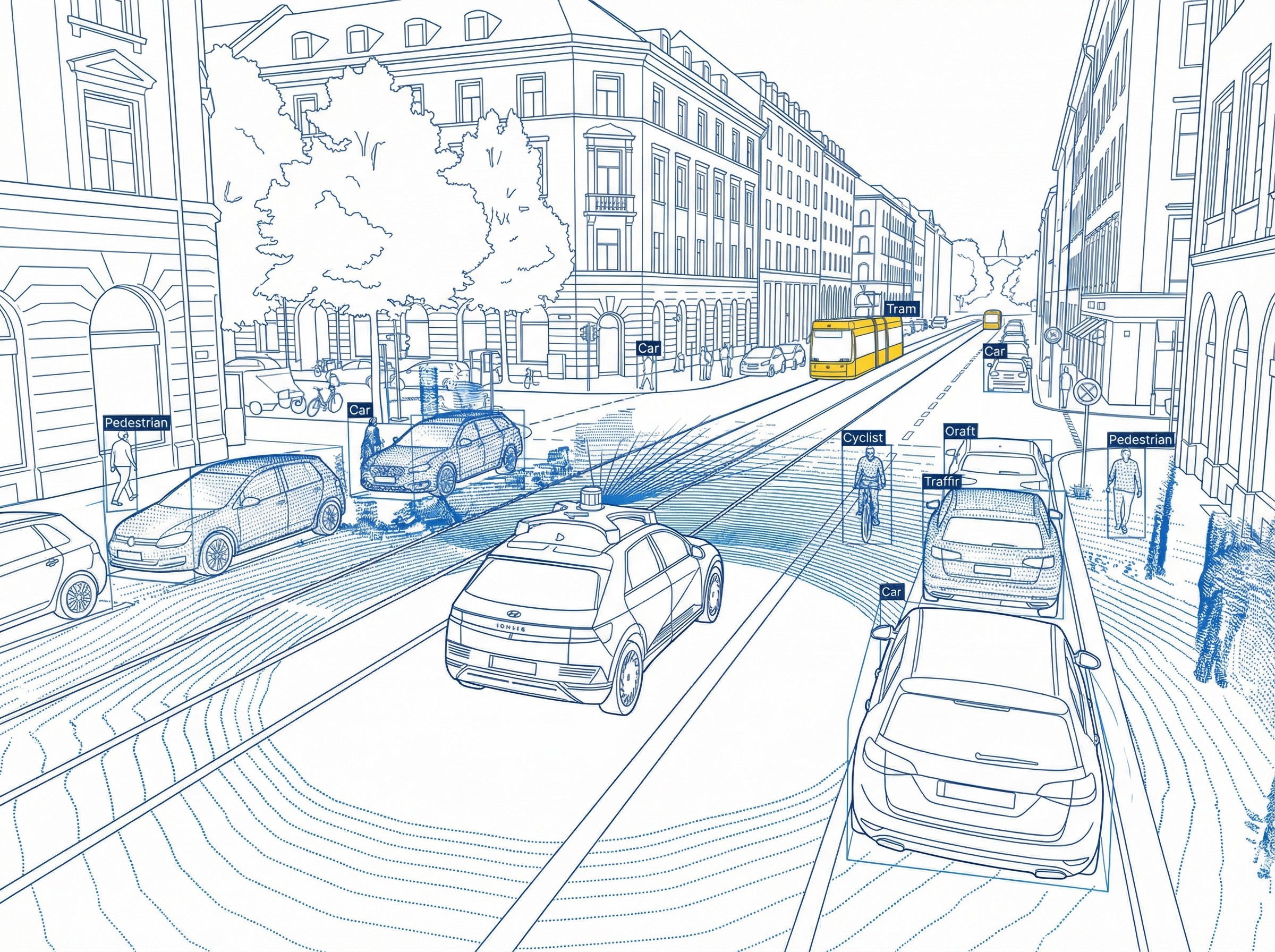

Real-time perception validation at the edge

This view represents a live perception validation platform used to observe, verify, and stress-test production-grade LiDAR and camera perception systems directly in real-world environments. The overlay illustrates how objects, geometry, and semantics are detected and validated in real time, enabling continuous feedback on perception quality, failure modes, and system confidence. By moving annotation and validation closer to the vehicle — at the edge — ReDrive enables faster iteration, earlier detection of perception weaknesses, and safer deployment across complex urban environments. This bridges the gap between simulation, offline datasets, and real-world operation.

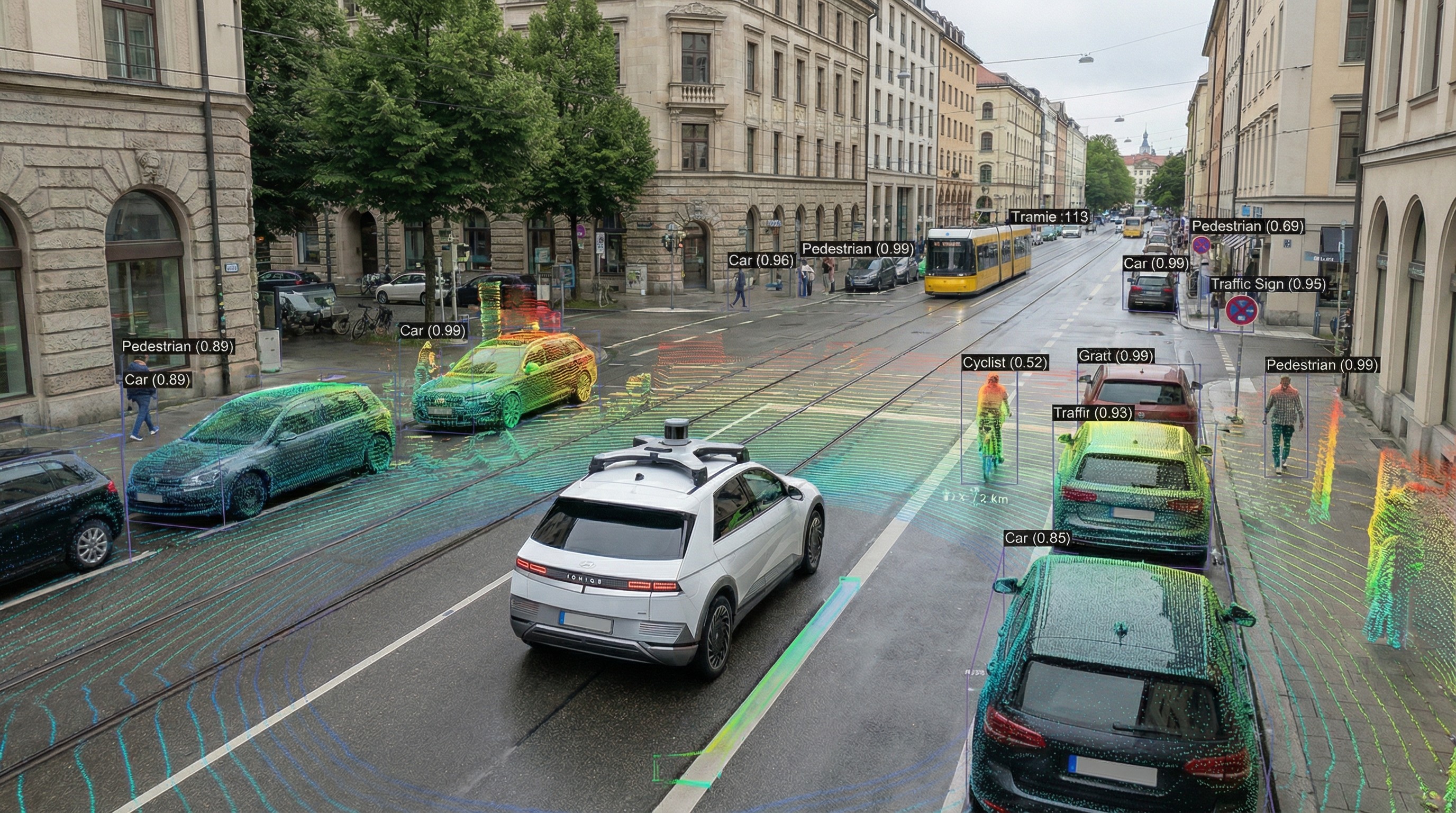

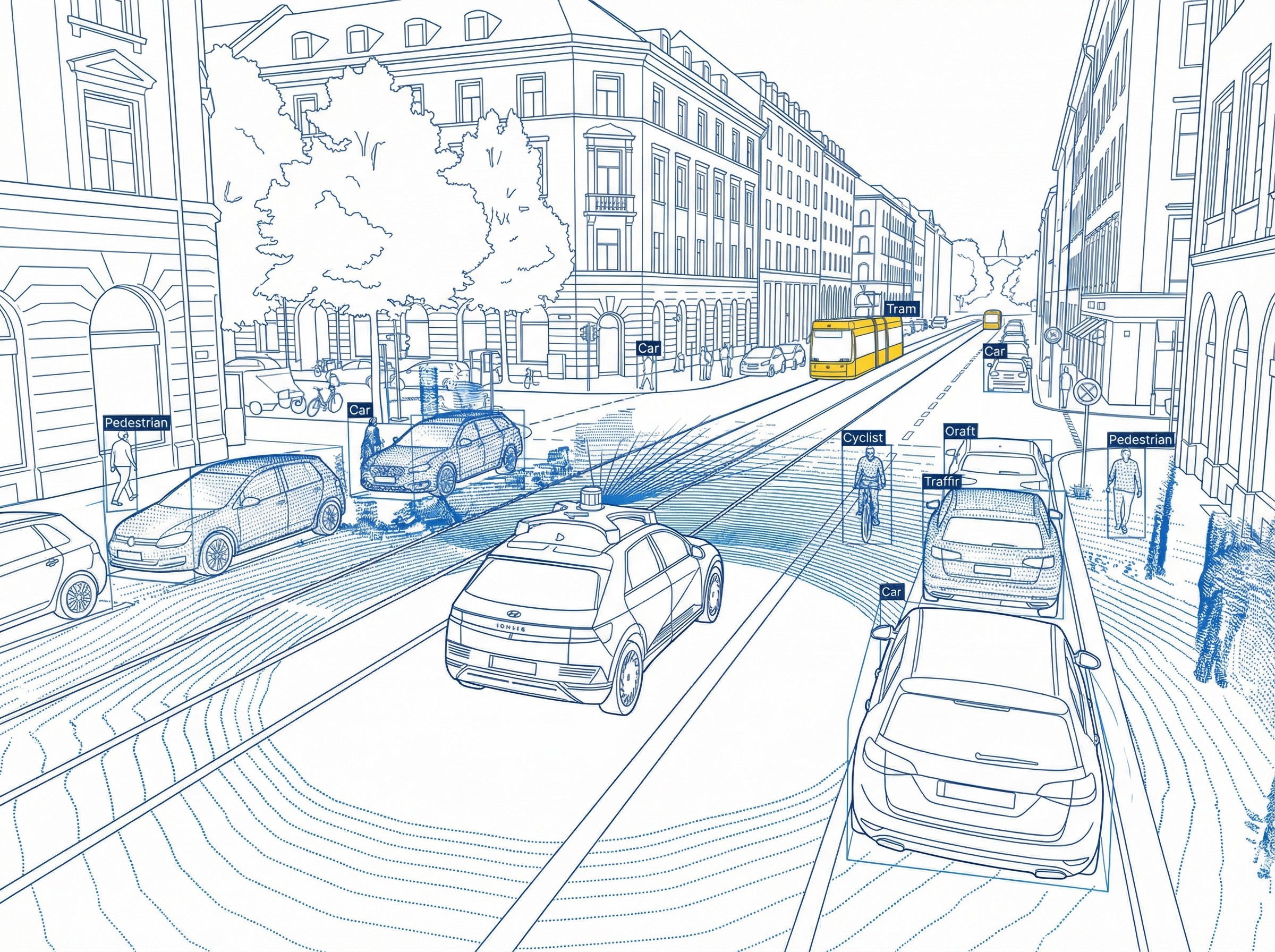

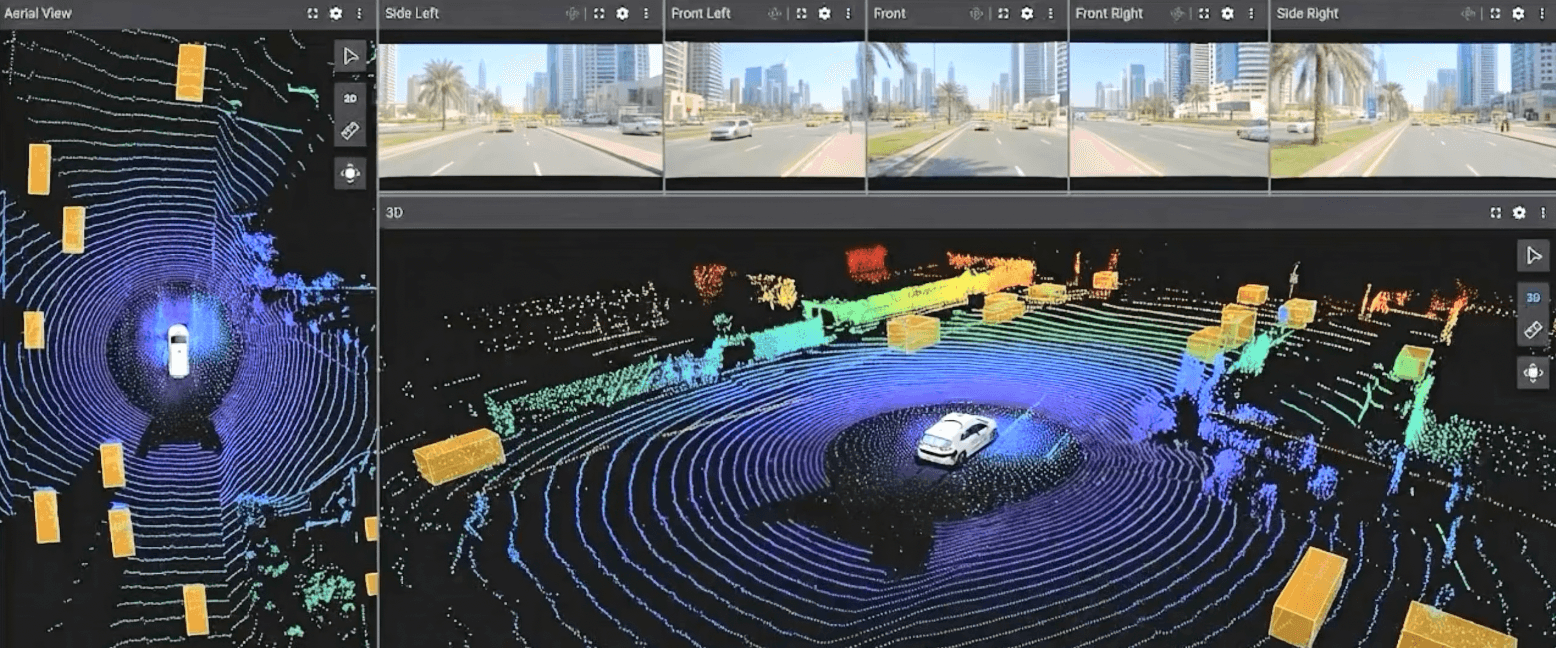

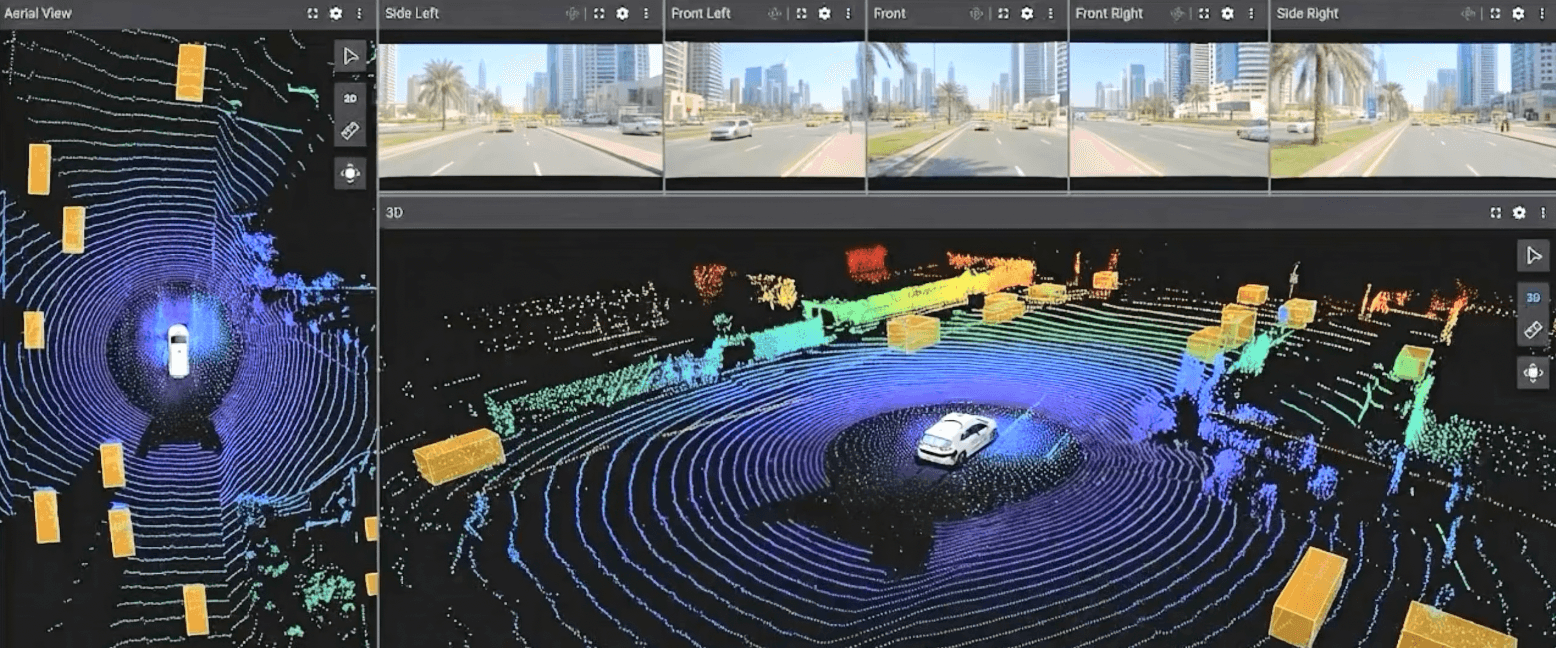

From Sensor Data to Deployment-Ready Perception

This visualization shows how raw sensor streams (LiDAR point clouds and multi-camera inputs) are transformed into validated, deployment-ready perception outputs. Object understanding, spatial consistency, and scene structure emerge in real time from synchronized sensor data rather than from offline post-processing. The frame illustrates our core focus: real-time perception validation at the edge. By continuously evaluating perception outputs against live sensor data, the system enables faster iteration, earlier detection of failure modes, and a reliable transition from simulation to real-world operation across diverse urban environments.
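
One way to picture "evaluating perception outputs against live sensor data" is a cross-sensor consistency check: a camera detection is only trusted if enough LiDAR returns project into its bounding box. The following is a minimal sketch under stated assumptions, not a description of the ReDrive implementation. The names `Box2D`, `project_point`, `lidar_support`, and `unsupported_detections` are hypothetical, the points are assumed to be already transformed into the camera frame (x right, y down, z forward), and a plain pinhole model without lens distortion is assumed.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


@dataclass
class Box2D:
    """Hypothetical camera detection: axis-aligned box in pixel coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def project_point(point: Point3D, fx: float, fy: float,
                  cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection of a camera-frame point; None if behind the camera."""
    x, y, z = point
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def lidar_support(box: Box2D, points: Sequence[Point3D], fx: float, fy: float,
                  cx: float, cy: float) -> int:
    """Count LiDAR points whose projection falls inside the detection box."""
    count = 0
    for p in points:
        uv = project_point(p, fx, fy, cx, cy)
        if uv is None:
            continue
        u, v = uv
        if box.x_min <= u <= box.x_max and box.y_min <= v <= box.y_max:
            count += 1
    return count


def unsupported_detections(boxes: Sequence[Box2D], points: Sequence[Point3D],
                           fx: float, fy: float, cx: float, cy: float,
                           min_points: int = 5) -> List[Box2D]:
    """Return detections backed by fewer than min_points LiDAR returns."""
    return [b for b in boxes
            if lidar_support(b, points, fx, fy, cx, cy) < min_points]
```

A detection returned by `unsupported_detections` is a candidate for review rather than a confirmed failure: sparse returns at long range or on dark surfaces can also leave a real object with little LiDAR support, so the `min_points` threshold (an illustrative value here) would need tuning per sensor setup.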

Real-Time Perception Validation Testbed (MVP)

This screen capture shows our active MVP used to validate real-time perception pipelines on a live, interactive system. A CARLA vehicle is rendered on a remote GPU and controlled through a browser-based interface, enabling synchronized multi-camera video streaming, telemetry exchange, and perception feedback under real-time constraints. Rather than focusing on remote driving as an end product, this MVP serves as a testbed for real-time perception validation: measuring latency, sensor synchronization, data flow stability, and system responsiveness. It allows rapid iteration on perception logic, annotation pipelines, and edge-compute workflows before deployment to real vehicles and public infrastructure.
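
The latency and sensor-synchronization measurements mentioned above can be sketched in a few lines. This is an illustrative example only, assuming every frame carries a capture timestamp and a display timestamp in milliseconds taken from a shared (or already synchronized) clock. The function names and the dictionary layout are hypothetical and do not come from the MVP or from the CARLA API.

```python
import math
from statistics import mean
from typing import Dict, List, Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty sequence."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


def latency_report(capture_ts_ms: Sequence[float],
                   display_ts_ms: Sequence[float]) -> Dict[str, float]:
    """Summarize end-to-end latency from paired capture/display timestamps."""
    latencies = [d - c for c, d in zip(capture_ts_ms, display_ts_ms)]
    return {
        "mean_ms": mean(latencies),
        "p95_ms": percentile(latencies, 95),
        "max_ms": max(latencies),
    }


def sync_skew_ms(camera_ts_ms: Dict[str, float]) -> float:
    """Spread between the earliest and latest camera timestamp in one frame set."""
    return max(camera_ts_ms.values()) - min(camera_ts_ms.values())


def out_of_sync_frames(frames: Sequence[Dict[str, float]],
                       budget_ms: float) -> List[int]:
    """Indices of frame sets whose inter-camera skew exceeds the budget."""
    return [i for i, f in enumerate(frames) if sync_skew_ms(f) > budget_ms]
```

Reporting a high percentile alongside the mean matters for interactive use, because occasional latency spikes affect responsiveness even when the average looks acceptable.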

Solution

What We Offer

A modular, real-time perception validation stack designed to continuously evaluate, stress-test, and improve LiDAR- and camera-based perception systems under real operating conditions — from development to scalable deployment.

About the Founder

Advisory & Academic Collaboration

I am currently engaging with academic researchers and industry practitioners in autonomous systems, perception, and data-driven validation. These discussions cover ongoing review of our technical architecture, white paper, and domain-adaptation approach, as well as guidance on research direction, validation methodology, and real-world deployment challenges. They also include potential advisory roles with equity-based participation, subject to institutional approval and formal agreements.

Engineering Real-Time Perception Infrastructure for Autonomous Systems

5+ years across autonomous systems engineering, perception validation, simulation, and real-time teleoperation.

With a background spanning autonomous vehicles, perception systems, and real-time tele-operation platforms, my work focuses on the intersection of on-road data, edge computing, and perception validation. I’ve worked across the full lifecycle of autonomous systems — from simulation and test tooling to live vehicle operations and safety-critical validation workflows.

My experience includes building and operating real-time data pipelines for LiDAR and multi-camera systems, developing tele-operation and monitoring interfaces, and contributing to system-level V&V frameworks used to evaluate perception robustness under real-world conditions. This includes exposure to certification-oriented testing methodologies, large-scale test campaigns, and infrastructure designed for continuous data capture and analysis.

Through ReDrive Systems, I’m focused on building a practical, scalable perception validation platform — one that enables engineering teams to identify perception failures as they happen, iterate faster, and deploy with confidence across diverse urban environments. The goal is not just better datasets, but continuous, real-world feedback loops that bridge simulation, edge validation, and live operation.

Who we are & what we do

[The Mission] We build real-time, domain-adaptive perception validation infrastructure that helps autonomous systems move from controlled development to continuous real-world operation. Our focus is on reducing perception uncertainty, validation latency, and deployment risk by exposing failures directly at the edge — where vehicles operate — rather than relying solely on offline datasets or delayed annotation pipelines.

What we build

[The Platform] An open-core, modular platform for real-time perception validation. We validate LiDAR and camera perception directly on edge-equipped vehicles, exposing failures as they occur. By integrating with existing fleets — including cars, buses, and trams — we enable continuous, cost-efficient data capture in live urban environments. Validated data is transformed into simulation-ready assets for faster iteration and safer deployment.
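
To make "exposing failures as they occur" concrete, here is a minimal sketch of an on-vehicle monitor that watches a rolling window of per-frame detection confidence and raises a flag as soon as the window mean drops below a threshold. It is a simplified illustration under assumed inputs, not the platform's actual logic. The class name `ConfidenceMonitor`, the window size, and the threshold are all hypothetical.

```python
from collections import deque
from typing import Deque


class ConfidenceMonitor:
    """Flags sustained drops in per-frame detection confidence."""

    def __init__(self, window: int = 30, threshold: float = 0.5) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self.window = window
        self.threshold = threshold
        # deque with maxlen discards the oldest score automatically.
        self._scores: Deque[float] = deque(maxlen=window)

    def update(self, frame_confidence: float) -> bool:
        """Add one frame's score; return True if the rolling mean is too low."""
        self._scores.append(frame_confidence)
        if len(self._scores) < self.window:
            return False  # not enough history yet to judge
        return sum(self._scores) / len(self._scores) < self.threshold
```

Averaging over a window rather than reacting to a single frame trades a short detection delay for fewer false alarms from one-off low-confidence frames.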

Partner with us

[The Collaboration Model] We work with mobility operators, cities, public transport authorities, OEMs, and autonomy developers to integrate perception validation directly into operating fleets. By aligning engineering, infrastructure, and regulatory stakeholders, we enable compliant, city-scale validation while creating shared value from continuously captured real-world data. Our partnerships are designed to support long-term deployment, not one-off test campaigns.

Build the Infrastructure for Real-World Autonomous Deployment

We build an open-core perception validation platform that helps autonomous systems move from development to real-world operation. By validating LiDAR and camera perception directly in live environments, we reduce deployment risk, shorten iteration cycles, and accelerate safe market entry.